Python Scripts & Bash Automation

My programming portfolio highlights a diverse range of projects that demonstrate my technical proficiency and innovative approach to problem-solving. Using Python and Bash scripting, I’ve addressed challenges in data analysis, workflow automation, and artificial intelligence, delivering practical solutions for real-world applications.

What You’ll Find Here:

Data Analysis: Cleaning, transforming, and visualizing data to uncover meaningful insights.

Data Scraping: Extracting and organizing valuable datasets with Python, using tools such as Pandas and Scikit-learn.

AI & Automation: Designing AI pipelines with PyTorch and Hugging Face to automate workflows and solve real-world problems.

Bash Scripting: Streamlining system-level tasks and enhancing efficiency by integrating Bash and Python workflows.

Each project reflects my ability to bridge data, technology, and business needs.

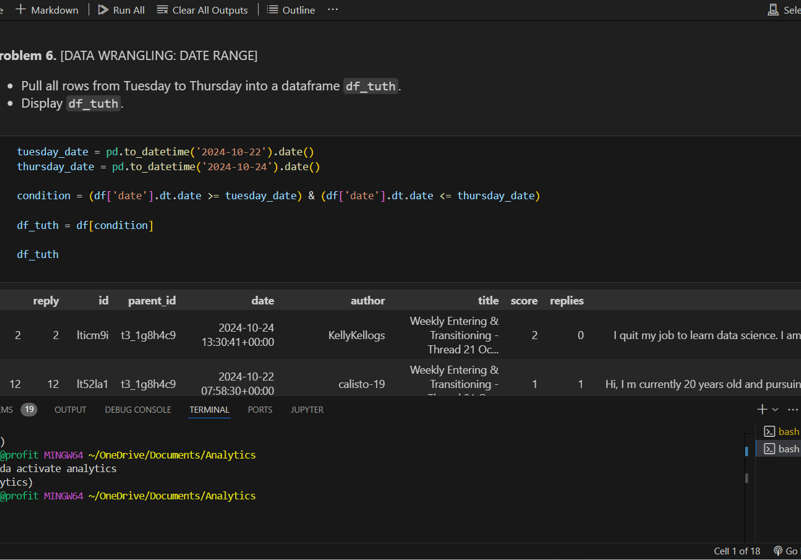

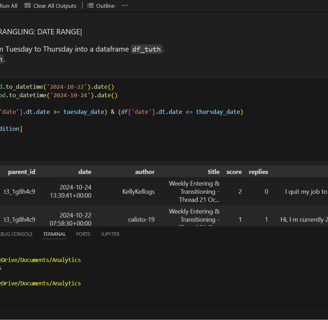

Data Wrangling & Analysis

This project demonstrates my ability to collect data from APIs using API keys and to manipulate and filter datasets in Python for effective data analysis.

Utilized Pandas to filter and extract data within a specific date range (e.g., Tuesday to Thursday) for actionable insights.

Ensured clean and structured datasets for downstream analysis, showcasing proficiency in Python's data manipulation libraries.

Integrated Bash scripting to activate and manage virtual environments, streamlining workflows and ensuring efficient execution.
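The date-range filter described above can be sketched with Pandas as follows. This is a minimal illustration rather than the project's actual code: the column names `timestamp` and `value`, the sample rows, and the helper `filter_weekdays` are all assumptions made for the example.

```python
import pandas as pd


def filter_weekdays(df, date_col, first_day=1, last_day=3):
    """Return rows whose date falls between two weekdays, inclusive.

    Weekdays follow the pandas convention: Monday=0 ... Sunday=6,
    so the defaults (1 to 3) select Tuesday through Thursday.
    """
    dates = pd.to_datetime(df[date_col])
    mask = dates.dt.dayofweek.between(first_day, last_day)
    return df.loc[mask].reset_index(drop=True)


# Hypothetical sample data: one row per day for a full week,
# starting on Monday, 2024-01-01.
sample = pd.DataFrame(
    {
        "timestamp": pd.date_range("2024-01-01", periods=7, freq="D"),
        "value": range(7),
    }
)

midweek = filter_weekdays(sample, "timestamp")
print(midweek)
```

Converting the column with `pd.to_datetime` first means the same helper works whether the dates arrive as strings (common in API responses) or as datetime values.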

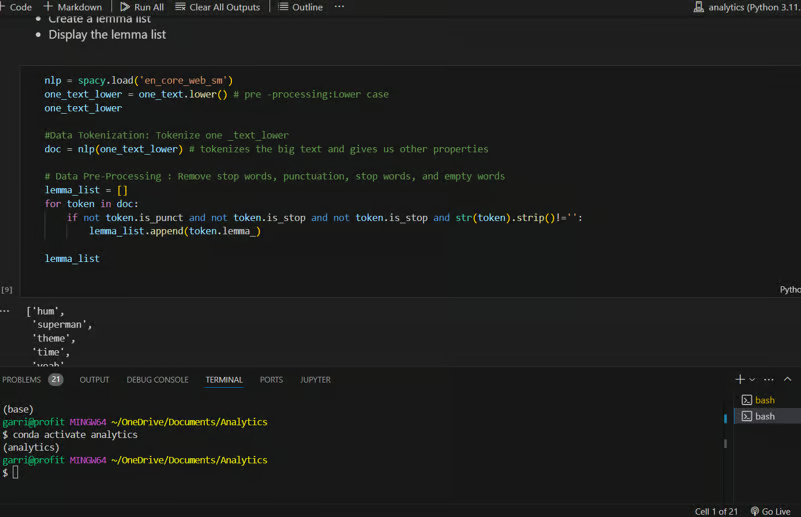

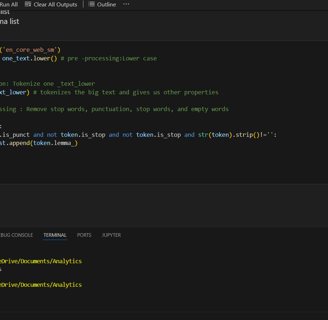

Natural Language Processing (NLP): Lemmatization and Text Preprocessing

Objective: Preprocess raw text data by normalizing and tokenizing it, then building a lemma list for further analysis.

Steps Taken:

Lowercasing: Standardized text by converting it to lowercase for uniformity.

Tokenization: Split the text into tokens (words) using spaCy, enabling further analysis.

Stopword and Punctuation Removal: Filtered out irrelevant words (e.g., "and," "the") and punctuation to focus on meaningful data.

Lemmatization: Reduced words to their base (dictionary) forms (e.g., "running" → "run"), simplifying data for modeling.
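The four steps above could be combined into a single pass along these lines. This is a sketch under stated assumptions, not the project's actual script: the function names `build_lemma_list` and `load_pipeline` are hypothetical, and the model name `en_core_web_sm` is assumed because the original does not say which spaCy model was used.

```python
def build_lemma_list(text, nlp):
    """Lowercase, tokenize, drop stopwords/punctuation, and lemmatize.

    `nlp` is any loaded spaCy pipeline that includes a lemmatizer.
    """
    # Steps 1 and 2: lowercase the raw text, then let spaCy tokenize it.
    doc = nlp(text.lower())
    # Steps 3 and 4: skip stopwords, punctuation, and whitespace tokens,
    # keeping the lemma of every remaining token.
    return [
        token.lemma_
        for token in doc
        if not (token.is_stop or token.is_punct or token.is_space)
    ]


def load_pipeline(model_name="en_core_web_sm"):
    """Load a spaCy pipeline; the default model name is an assumption.

    The import is kept inside the function so that build_lemma_list
    can be reused and tested without spaCy installed.
    """
    import spacy

    return spacy.load(model_name)
```

Typical usage, assuming the model has been installed with `python -m spacy download en_core_web_sm`, would be `build_lemma_list("The runners were running quickly.", load_pipeline())`. Passing the pipeline in as an argument means the model is loaded once and reused across many documents.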

Tools Used:

Python: For scripting and automation.

spaCy: An advanced NLP library for tokenization and lemmatization.

Impact: Prepared clean and normalized text data, suitable for downstream tasks like sentiment analysis, classification, or topic modeling.

Learn more: Ask me how I applied NLP techniques like tokenization and lemmatization to prepare text data for analysis.

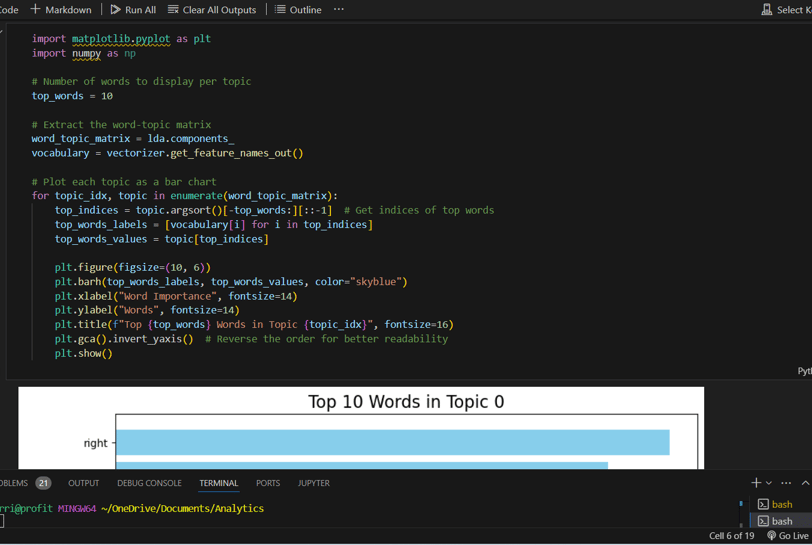

Topic Modeling and Visualization

Objective: Identify and visualize the most important words within a topic using Latent Dirichlet Allocation (LDA).

Steps Taken:

Generated a word-topic matrix using LDA to extract and rank the top words for each topic.

Designed a bar chart visualization for the top 10 words in each topic, improving interpretability of the topic modeling results.

Utilized NumPy for data manipulation and Matplotlib for clean and visually appealing plots.

Tools Used:

Python: Core scripting language.

Scikit-learn: For LDA and other machine learning tasks.

NumPy: For data manipulation.

Matplotlib: For visualization.

Impact: Provided insights into underlying themes within textual data, facilitating further analysis and decision-making.

Learn more: Feel free to ask me more about this project. I’d be happy to walk you through the process of topic modeling and explain how it can extract meaningful insights from large text datasets.